The ULTIMATE guide to Technical SEO for 'dummies'

The phenomenon of Technical SEO could be compared to MotoGP: it is not just the best rider who wins, but the one with the best technically equipped bike. That is why optimizing websites for search engines takes endless knowledge and experience.

Prologue

In the late 1990s, as the Internet became an ever-increasing success, SEO emerged as its own branch of online marketing, aimed at securing good positions for websites in the Search Engine Results Pages (SERPs).

Optimization options were still limited. Generally, it was enough to fill a home page and its subpages with certain keywords, both in the meta tags and on the page itself, to rank well for a particular keyword.

At the time, it was very easy to influence rankings with backlinks. In fact, link popularity was a key factor, rather than domain popularity or trust in a website's domain.

Now, with the increasing complexity of search algorithms and increasingly differentiated web search, as well as an exponentially growing number of new websites, the demand for search engine optimization has multiplied.

Many of these changes fall squarely within Technical SEO, which is why this part of search engine optimization matters more and more today. And you're in luck, because we're going to explain everything to you.

Chapter I: Basics

Since this text is a beginner's guide, let's start at the beginning.

What is Technical SEO

Technical SEO is the aspect of SEO focused on helping search engines crawl and index a website's pages and on improving its user experience (UX).

As its name suggests, it deals with the technical side of a website, such as the source code, the operating system or the server configuration, among others.

Objectives of Technical SEO

Although Technical SEO has many specific objectives, there are two main ones:

1. Get a good position in the SERPs

While SEO in general aims to rank content for a keyword by helping search engines such as Google or Bing recognize its value, Technical SEO also aims to strengthen the website as a whole.

Doing this well greatly increases the chances that every page of the website gets indexed and ranks well. For that, the content must also be high quality and follow both On Page and Off Page SEO best practices.

2. Provide a good user experience

Another goal of Technical SEO is to improve the user experience, so that users stay longer on the website and the bounce rate goes down.

This builds loyal, engaged users for the brand, which supports the website's digital marketing strategy.

Chapter II: Crawling

Did you know that search engines are like the pigs used to find truffles? They crawl the entire Internet in search of valuable content, but… how do they do it?

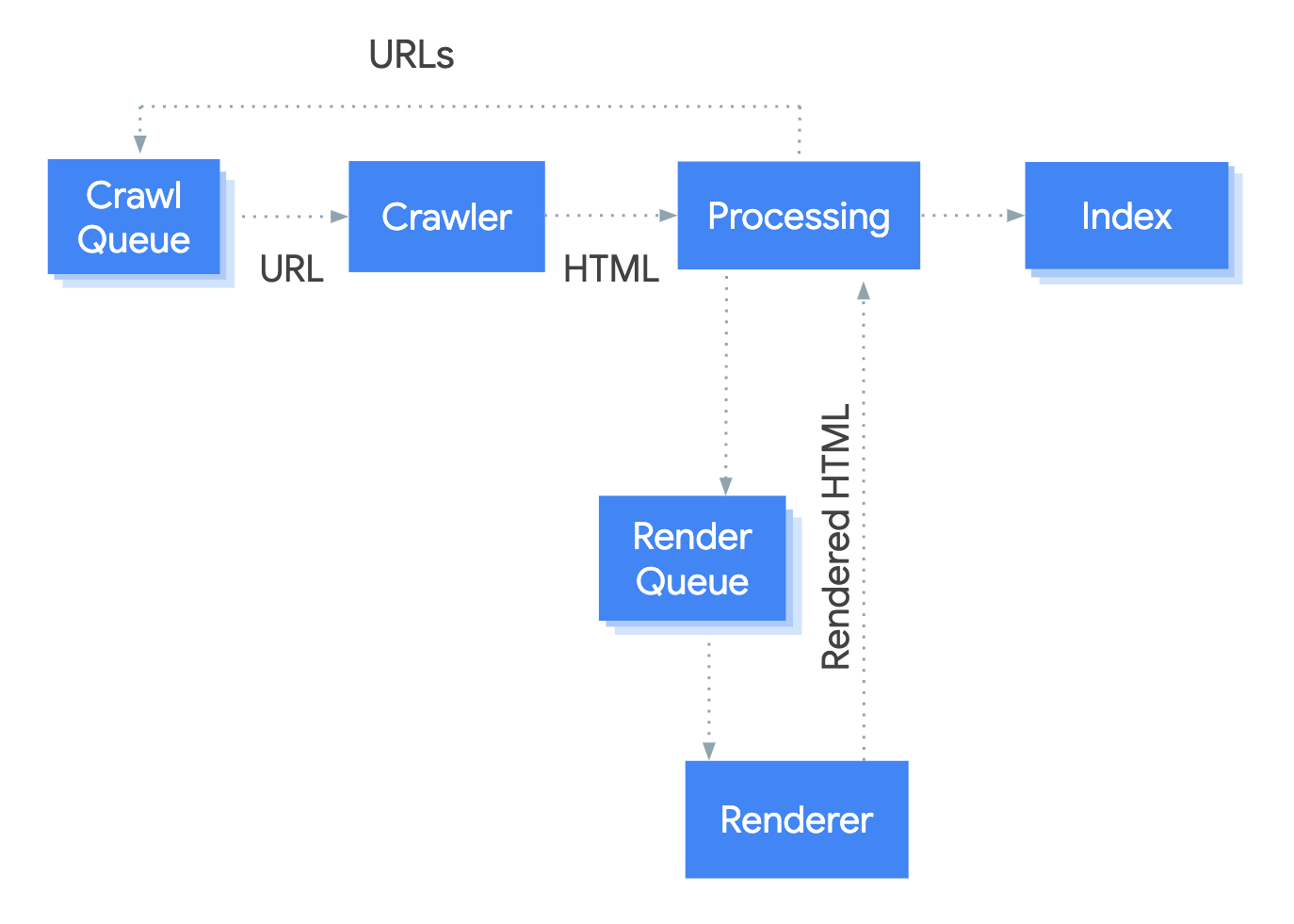

How crawling works

Crawlers gather content from pages and use the links on those pages to search for more pages, allowing content to be found on the web.

Source: Google
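To make the link-discovery step more concrete, here is a minimal Python sketch: it parses a page's HTML with the standard library's `html.parser` and turns every `<a href>` into an absolute URL. The `discover_links` helper and the example URLs are hypothetical, and a real crawler would also fetch the pages, respect robots.txt and deduplicate URLs.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from the href of every <a> tag."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links and drop #fragments (they point to the same page).
            absolute, _fragment = urldefrag(urljoin(self.base_url, href))
            self.links.append(absolute)


def discover_links(base_url: str, html: str) -> List[str]:
    """Hypothetical helper: return the links found on one page."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


print(discover_links("https://example.com/blog/", '<a href="post-1">1</a><a href="/about#team">About</a>'))
```

Each URL found this way would then be handed to the crawl queue for later crawling.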

URL sources

Every crawler has to start somewhere. Normally, crawlers build lists of URLs found through links on pages, but they also use sitemaps, which list a site's pages.
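A sitemap is simply an XML file listing URLs in the format defined at sitemaps.org. The sketch below, assuming a hypothetical `build_sitemap` helper and example URLs, builds a minimal one with Python's `xml.etree.ElementTree`, which also escapes characters such as `&` for you. Optional tags like `<lastmod>` are left out.

```python
import xml.etree.ElementTree as ET
from typing import List

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: List[str]) -> str:
    """Hypothetical helper: return a minimal XML sitemap with one <loc> per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes &, < and >
    return ET.tostring(urlset, encoding="unicode")


print(build_sitemap(["https://example.com/", "https://example.com/blog/"]))
```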

Crawl queue

All URLs that need to be crawled or recrawled are assigned a priority and placed in the crawl queue, an ordered list of the URLs that the search engine wants to crawl.
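The crawl queue can be pictured as a priority queue. This hypothetical `CrawlQueue` class uses Python's `heapq` module: lower numbers are crawled first, ties keep insertion order, and a URL already waiting in the queue is not added twice. How search engines actually score priority is not public, so the numbers here are arbitrary.

```python
import heapq
import itertools
from typing import List, Optional, Set, Tuple


class CrawlQueue:
    """Hypothetical crawl queue: lower priority numbers are crawled first."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._order = itertools.count()  # tie-breaker: equal priorities keep insertion order
        self._pending: Set[str] = set()  # URLs currently waiting in the queue

    def add(self, url: str, priority: int) -> None:
        if url in self._pending:
            return  # already queued; skip the duplicate
        self._pending.add(url)
        heapq.heappush(self._heap, (priority, next(self._order), url))

    def next_url(self) -> Optional[str]:
        if not self._heap:
            return None
        _priority, _order, url = heapq.heappop(self._heap)
        self._pending.discard(url)  # it may be queued again later for a recrawl
        return url


queue = CrawlQueue()
queue.add("https://example.com/old-page", priority=5)
queue.add("https://example.com/", priority=1)
queue.add("https://example.com/", priority=3)  # ignored: already waiting
print(queue.next_url())  # https://example.com/
```

Removing a URL from the pending set when it is popped is what allows recrawls: the same URL can be queued again later.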

Crawler

This is the system that fetches the content of the pages.

Processing systems

These are various systems that handle canonicalization, send pages to the renderer (which loads them the way a browser does), and process them to extract more URLs to crawl.

Renderer

The renderer loads a page the way a browser does, including its JavaScript and CSS, so that Google can see what most users see.

Index

The index is the collection of stored pages that Google can show to users.

Crawl controls

There are several ways to control what gets crawled on a website.

Robots.txt

The robots.txt file is what tells search engines where they can and cannot go on a site.

Keep in mind, however, that Google can still index pages it cannot crawl if links point to those pages.
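Python's standard library includes a robots.txt parser, `urllib.robotparser`, which is handy for checking rules before crawling. The robots.txt content below is a hypothetical example. Note that a crawler follows only the most specific `User-agent` group that matches it, which is why Googlebot may fetch `/admin/` here even though the `*` group blocks it. The standard-library parser does plain prefix matching, so it does not implement the `*` and `$` wildcards that Google supports.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; for a live site you would call set_url() and read() instead.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart

User-agent: Googlebot
Disallow: /drafts/
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

print(robots.can_fetch("Googlebot", "https://example.com/drafts/post"))  # False
print(robots.can_fetch("Googlebot", "https://example.com/admin/"))       # True: only the Googlebot group applies
print(robots.can_fetch("Bingbot", "https://example.com/admin/"))         # False: falls back to the * group
print(robots.can_fetch("Bingbot", "https://example.com/blog/"))          # True
```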

Crawl Frequency

Many crawlers support a crawl-delay directive in robots.txt, which lets you set how often they may crawl pages.

Unfortunately, Google doesn't honor this directive. Google Search Console used to offer a crawl rate setting, but Google retired it in January 2024; to slow Googlebot down temporarily, Google now recommends returning 500, 503 or 429 status codes.
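If you run your own crawler or audit tool, you can read the directive with the same `urllib.robotparser` module through its `crawl_delay()` method. In this sketch the robots.txt text and the `seconds_between_requests` helper (with its 1-second fallback) are assumptions. The parser reports the delay for any user agent, including Googlebot; it is Googlebot itself that ignores it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking all crawlers to wait 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Disallow: /search
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def seconds_between_requests(parser: RobotFileParser, agent: str, default: float = 1.0) -> float:
    """Hypothetical helper: use the site's Crawl-delay if it has one, else our own default."""
    delay = parser.crawl_delay(agent)  # None when no Crawl-delay applies to this agent
    return float(delay) if delay is not None else default


print(seconds_between_requests(robots, "MyAuditBot"))  # 10.0
```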

Access restrictions

For the page to be accessible to some users but not to search engines, there are three alternatives:

- A login system.

- HTTP authentication (a password is required to access the pages).

- An IP allowlist (only certain IP addresses can access the pages).

These configurations are ideal for internal networks, members-only content, or test/development sites, among others.

They let a specific group of users access the pages, while search engines can neither access nor index them.
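An IP allowlist boils down to checking each request's address against a list of networks. This minimal sketch uses Python's `ipaddress` module; the network ranges (taken from the documentation-only address blocks) and the `handle_request` function are hypothetical, and in practice this check usually lives in the web server or firewall configuration rather than in application code.

```python
import ipaddress

# Hypothetical allowlist: an office network and a single VPN exit address.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.7/32"),
]


def is_allowed(client_ip: str) -> bool:
    """Return True if the client address falls inside an allowlisted network."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # malformed addresses are rejected
    return any(address in network for network in ALLOWED_NETWORKS)


def handle_request(client_ip: str) -> int:
    """Hypothetical staging server: 200 for allowlisted IPs, 403 for everyone else, crawlers included."""
    return 200 if is_allowed(client_ip) else 403


print(handle_request("203.0.113.42"))  # 200
```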

Viewing crawl activity

The easiest way to see what Google is crawling is the Crawl Stats report in Google Search Console, which gives more detail on how Google crawls a website.

To see all crawl activity on a website first-hand, however, you need access to the server logs, and possibly a tool to analyze the data.
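As an example of that kind of analysis, the sketch below counts which paths were requested by clients identifying as Googlebot in an access log, assuming the common Apache/Nginx "combined" log format. The sample lines are made up. Keep in mind that anyone can fake a user agent; Google recommends confirming real Googlebot traffic with a reverse DNS lookup, which this sketch does not do.

```python
import re
from collections import Counter

# "Combined" log format: IP ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

# Made-up sample lines for illustration.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Mar/2024:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [10/Mar/2024:10:00:02 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '66.249.66.1 - - [10/Mar/2024:10:05:00 +0000] "GET /robots.txt HTTP/1.1" 200 120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]


def googlebot_hits(lines):
    """Count requests per path from clients whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


print(googlebot_hits(SAMPLE_LOG).most_common())
```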

Crawl settings

Every website has a crawl budget, which is a combination of how often Google wants to crawl it and how much crawling it allows.

The most popular pages and those that change frequently will be crawled more often than pages that Google does not consider popular or well linked.

If crawlers notice signs of strain while crawling the website (for example, the server responding more slowly than usual), they will typically slow down or stop crawling until the situation improves.

When the pages have been crawled, they are rendered and sent to the index, i.e. the master list of pages that can be returned for search queries.

Chapter III: Indexing

Logically, none of the above would be useful if we do not make sure that the pages are indexed or check how they are being indexed.

Robot directives

The robots meta tag is a piece of HTML that tells search engines how to crawl or index a specific page. It is placed in the `<head>` section of a web page and looks like this:

`<meta name="robots" content="noindex, nofollow">`
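To check a page's robots directives programmatically, you can read them from the HTML. This is a minimal sketch with Python's `html.parser`; the `robots_directives` helper is hypothetical, and it also reads `<meta name="googlebot">`, which Google honors as a Googlebot-specific variant. It ignores directives sent in the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser
from typing import Set


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()  # attribute values keep their case, so normalise
        if name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html: str) -> Set[str]:
    """Hypothetical helper: return the set of robots directives declared in the HTML."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


print(robots_directives('<head><meta name="robots" content="noindex, nofollow"></head>'))
```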

Canonicalization

If there are several versions of the same page, Google chooses one to keep in its index through a process called canonicalization. The URL selected as canonical is the one shown in the search results. Google uses several signals to choose the canonical URL, such as the following:

- Internal links

- Canonical tags

- Duplicate pages

- Redirects

- Sitemap URLs

The easiest way to check how Google has indexed a page is to use the URL inspection tool in Google Search Console, where the canonical URL chosen by Google is displayed.
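The canonical tag is only one of these signals, but it is the easiest to audit yourself. This hypothetical `declared_canonical` helper extracts the `<link rel="canonical">` URL from a page and resolves it against the page URL. Google may still pick a different canonical, which is exactly what the URL Inspection tool reveals.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.href: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.href is None:
            self.href = attributes.get("href")


def declared_canonical(page_url: str, html: str) -> Optional[str]:
    """Hypothetical helper: absolute canonical URL declared by the page, if any."""
    parser = CanonicalParser()
    parser.feed(html)
    return urljoin(page_url, parser.href) if parser.href else None


print(declared_canonical("https://example.com/shoes?color=red", '<link rel="canonical" href="/shoes">'))
# https://example.com/shoes
```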

Chapter IV: Priorities

Although there are many best practices, not all have the same impact on rankings or traffic. The key word here is PRIORITIZATION.

Check indexing

Make sure that the pages you want users to find can be indexed by Google. For this, there are free tools such as Site Audit (as long as you own the website).

Reclaim lost links
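Indexability usually comes down to a few checks: the page returns a 200 status, and nothing tells Google not to index it, either in the robots meta tag or in the `X-Robots-Tag` HTTP header. The sketch below, a hypothetical `indexability_issues` function, expects those three inputs to have been collected already (for instance by a crawler). It is a simplification: it does not handle user-agent-specific header values such as `googlebot: noindex`, canonical tags or robots.txt blocks.

```python
from typing import Dict, List, Set


def indexability_issues(status: int, headers: Dict[str, str], robots_meta: Set[str]) -> List[str]:
    """Hypothetical check: list reasons why Google might not index a page."""
    issues = []
    if status != 200:
        issues.append(f"HTTP status {status}")  # redirects and errors need a closer look
    # HTTP header names are case-insensitive, so normalise them first.
    header_values = {name.lower(): value for name, value in headers.items()}
    header_directives = {
        part.strip().lower() for part in header_values.get("x-robots-tag", "").split(",")
    }
    if header_directives & {"noindex", "none"}:  # "none" means noindex, nofollow
        issues.append("noindex in X-Robots-Tag header")
    if {d.lower() for d in robots_meta} & {"noindex", "none"}:
        issues.append("noindex in robots meta tag")
    return issues


print(indexability_issues(200, {"X-Robots-Tag": "noindex"}, set()))
```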

Web pages often change their URLs over the years. In many cases, these old URLs have links from other websites.

If they are not redirected to the current pages, those links are lost and no longer count towards the pages.

It's not too late to add these redirects, and the lost value can be reclaimed very quickly. It is like a fast-track form of link building.
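Reclaiming lost links is mostly a matter of mapping each old URL to its current equivalent with a 301 (permanent) redirect. This is a minimal sketch assuming a hypothetical `REDIRECTS` map and set of live pages; on a real site the same map would normally go into the server or CMS redirect settings. The `unreclaimed` helper lists URLs that still have backlinks (for example, from a backlink report) but now return 404.

```python
from typing import Iterable, List, Optional, Tuple

# Hypothetical: old URLs that still have backlinks -> their current equivalents.
REDIRECTS = {
    "/2019/seo-tips": "/blog/technical-seo-tips",
    "/old-contact.html": "/contact",
}
LIVE_PAGES = {"/", "/blog/technical-seo-tips", "/contact"}


def respond(path: str) -> Tuple[int, Optional[str]]:
    """Return (status code, Location header) for a request path."""
    if path in REDIRECTS:
        return 301, REDIRECTS[path]  # permanent redirect to the current page
    if path in LIVE_PAGES:
        return 200, None
    return 404, None


def unreclaimed(backlink_targets: Iterable[str]) -> List[str]:
    """URLs that other sites link to but that now return 404."""
    return [path for path in backlink_targets if respond(path)[0] == 404]


print(unreclaimed(["/2019/seo-tips", "/old-pricing", "/contact"]))  # ['/old-pricing']
```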

Interlinking

Internal links (or interlinking) are links from one page of a website to another page on the same site. They help pages get found and rank better.
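One quick way to audit interlinking is to count how many internal links point to each page; pages with none (orphan pages) are hard for crawlers to find. The link graph below is a hypothetical example, as are the helper names.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical internal link graph: each page -> the pages it links to.
LINKS: Dict[str, List[str]] = {
    "/": ["/blog/", "/contact"],
    "/blog/": ["/blog/technical-seo", "/"],
    "/blog/technical-seo": ["/blog/", "/contact"],
    "/contact": ["/"],
    "/blog/forgotten-post": ["/"],
}


def internal_inlinks(links: Dict[str, List[str]]) -> Counter:
    """Count, for every page, how many other pages link to it (once per linking page)."""
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        for target in set(targets):
            if target != source:  # self-links don't help discovery
                counts[target] += 1
    return counts


def orphan_pages(links: Dict[str, List[str]], homepage: str = "/") -> List[str]:
    """Pages no other page links to (the homepage is reached directly, so it is excluded)."""
    counts = internal_inlinks(links)
    return sorted(page for page, n in counts.items() if n == 0 and page != homepage)


print(orphan_pages(LINKS))  # ['/blog/forgotten-post']
```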

Add Schema markup

Schema markup is code that helps search engines better understand your content and activates many features that can help your site stand out from the rest.

Google's Search Gallery lists the different search features and the Schema markup each one needs for a page to be eligible.
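Schema markup is most commonly added as JSON-LD inside a `<script type="application/ld+json">` tag. This sketch, a hypothetical `article_json_ld` helper with made-up example values, generates markup for the schema.org `Article` type with a few of its properties; check Google's Search Gallery for the properties a given feature actually requires. Escaping `</` keeps text such as `</script>` in a headline from closing the tag early.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str) -> str:
    """Hypothetical helper: wrap schema.org Article data in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
    }
    body = json.dumps(data, ensure_ascii=False, indent=2)
    body = body.replace("</", "<\\/")  # "\/" is a valid JSON escape for "/"
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(article_json_ld("The ULTIMATE guide to Technical SEO", "Jane Doe", "2024-01-15"))
```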

Chapter V: Extra techniques

Some strategies take more work and bring less benefit than the previous ones, but they are still useful and should not be neglected.

Page experience signals

These are lesser-known factors, but they deserve attention because they affect the user experience (UX).

Core Web Vitals

The Core Web Vitals are three metrics that are part of Google's Page Experience signals used to measure UX:

- Largest Contentful Paint (LCP): measures loading performance.

- First Input Delay (FID): measures interactivity. Since March 2024, Google uses Interaction to Next Paint (INP) for this instead.

- Cumulative Layout Shift (CLS): measures visual stability.
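Google publishes thresholds for rating each metric, measured at the 75th percentile of page loads. The sketch below encodes them, including INP, which replaced FID as the interactivity metric in March 2024. The `rate` function is hypothetical; the values you feed it would come from a tool such as PageSpeed Insights.

```python
# Google's "good" and "poor" boundaries: value <= good is good, value > poor is poor.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds (replaced FID in March 2024)
    "FID": (100, 300),   # milliseconds (retired metric)
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Hypothetical helper: classify one measurement as Google's reports do."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


print(rate("LCP", 2.1))   # good
print(rate("CLS", 0.18))  # needs improvement
```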

HTTPS

The HTTPS protocol is responsible for protecting the communication between the browser and the server to prevent it from being intercepted and corrupted by attackers.

This gives confidentiality, integrity, and authentication to the vast majority of network traffic today.

Any website that has a lock icon in the address bar is using the HTTPS protocol.

Mobile optimization (mobile friendliness)

This is a check of whether web pages display properly and are easy to use for visitors on mobile devices.

Google Search Console used to point out potential mobile optimization issues in its Mobile Usability report, but Google retired that report in December 2023. Today, Lighthouse, built into Chrome DevTools, is a common way to check how mobile-friendly a website is.
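One basic requirement for mobile-friendly pages is a viewport meta tag such as `<meta name="viewport" content="width=device-width, initial-scale=1">`, which tells mobile browsers to fit the layout to the screen width. This hypothetical `has_responsive_viewport` helper checks for it with Python's `html.parser`; it covers only this single check, not font sizes, tap targets or other usability issues.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportParser(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        is_viewport = (attributes.get("name") or "").lower() == "viewport"
        if tag == "meta" and is_viewport and self.viewport is None:
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """Hypothetical check: True if the viewport width follows the device width."""
    parser = ViewportParser()
    parser.feed(html)
    if parser.viewport is None:
        return False
    settings = {}
    for part in parser.viewport.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    return settings.get("width") == "device-width"


print(has_responsive_viewport('<meta name="viewport" content="width=device-width, initial-scale=1">'))
```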

Safe browsing

Simply put, these checks ensure that pages are not deceptive and do not contain malware or potentially harmful downloads.

Interstitials

These are pop-ups that cover the main content and stop users from seeing it until they interact with them.

Hreflang for multilingual websites

This is an HTML attribute used to specify the language and geographic scope of a web page.

So, if a website is in different languages, you can use this hreflang tag to tell search engines that there are different variants.

It also lets you indicate that a website targets speakers of the same language in different regions (for example, es-es for Spain and es-mx for Mexico).

With this simple tag, search engines will show the correct version to users.
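The hreflang annotations are usually written as `<link rel="alternate">` tags in the `<head>`. This minimal sketch assumes a hypothetical set of URLs and a `hreflang_links` helper. Each variant should carry the full set of tags, including one pointing to itself, and `x-default` marks the fallback page for users who match none of the listed languages.

```python
from html import escape
from typing import Dict

# Hypothetical language/region variants of one page.
VARIANTS = {
    "es-es": "https://example.com/es-es/guia-seo-tecnico/",
    "es-mx": "https://example.com/es-mx/guia-seo-tecnico/",
    "en": "https://example.com/en/technical-seo-guide/",
}


def hreflang_links(variants: Dict[str, str], default_url: str) -> str:
    """Build the <link rel="alternate"> tags that every variant should include."""
    lines = [
        f'<link rel="alternate" hreflang="{code}" href="{escape(url)}" />'
        for code, url in sorted(variants.items())
    ]
    # x-default: the page to show when no listed language/region matches the user.
    lines.append(f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />')
    return "\n".join(lines)


print(hreflang_links(VARIANTS, VARIANTS["en"]))
```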

General maintenance (website health)

The following maintenance tasks have little direct effect on rankings, but they should still be done to improve the UX.

Broken links

As the name suggests, these are links, internal or external, that point to nonexistent resources and return 404 errors.
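Broken links can be found by requesting every linked URL and looking at the status code. This sketch uses Python's `urllib.request` to send `HEAD` requests (redirects are followed automatically). The helper names are hypothetical, and some servers answer `HEAD` with 405, so a production checker might retry with `GET`. Any 4xx or 5xx response, or no response at all, is treated as broken here.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable, List, Optional


def http_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Status code of a HEAD request (redirects are followed); None if unreachable."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:  # 4xx/5xx responses raise HTTPError
        return error.code
    except OSError:  # URLError (DNS failure, refused connection) and timeouts
        return None


def find_broken_links(
    urls: Iterable[str], get_status: Callable[[str], Optional[int]] = http_status
) -> List[str]:
    """Hypothetical checker: return the URLs that error out or cannot be reached."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken.append(url)
    return broken
```

Passing a different `get_status` function makes the checker easy to test without network access.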

Redirect chains

A redirect chain is the series of redirects between the initial URL and the final destination URL.
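Redirect chains can be detected by following each redirect until a URL that does not redirect is reached, and fixed by pointing every old URL straight at the final destination. This sketch works on a hypothetical in-memory redirect map; `follow_chain` also stops on loops and on chains longer than an arbitrary `max_hops` limit.

```python
from typing import Dict, List


def follow_chain(start: str, redirects: Dict[str, str], max_hops: int = 10) -> List[str]:
    """Return every URL visited from `start` up to the first non-redirecting URL."""
    chain = [start]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            raise ValueError("redirect loop: " + " -> ".join(chain + [target]))
        chain.append(target)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} redirects from {start}")
    return chain


def flatten(redirects: Dict[str, str]) -> Dict[str, str]:
    """Point every redirecting URL straight at its final destination."""
    return {source: follow_chain(source, redirects)[-1] for source in redirects}


chain = {"/a": "/b", "/b": "/c", "/c": "/d"}
print(follow_chain("/a", chain))  # ['/a', '/b', '/c', '/d']
print(flatten(chain))             # {'/a': '/d', '/b': '/d', '/c': '/d'}
```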

Chapter VI: Technical SEO Tools

All SEOs worth their salt need the best tools to improve the technical aspects of their website.

Google Search Console

Google Search Console is a free service that helps you monitor how your website appears on the search results pages (SERPs) and fix any issues.

It is also very useful for finding and resolving technical errors, submitting sitemaps, viewing structured data issues, and more.

Google’s Mobile-Friendly Test

Google's Mobile-Friendly Test let you check how easy a website was to use on a mobile device and see what Google saw when it crawled the page.

It also pointed out specific mobile usability problems, such as text too small to read, incompatible plugins and much more. Keep in mind that Google retired this tool in December 2023.

You can also use the Rich Results Test to see what Google sees on desktop or mobile.

Chrome Developer Tools

Chrome Developer Tools (or Chrome DevTools) allow you to debug errors on web pages, such as page speed issues, improve page rendering performance, and so on. They are a real blessing for any SEO.

PageSpeed Insights

PageSpeed Insights focuses on analyzing the loading speed of web pages, displaying performance score, actionable recommendations to make page load faster, etc.

Epilogue

Although this guide covers just the tip of the Technical SEO iceberg, it should give you the basics and help you go deeper into both On Page and Off Page SEO. In fact, all three work together to bring in organic traffic.

Although On Page and Off Page SEO are usually implemented first, Technical SEO is vital to position a website at the top of the SERPs. Do not hesitate to use these techniques to complete your SEO strategy and achieve results.

Keep in mind, though, that Technical SEO is not something that can be mastered in a day or two: it requires a lot of dedicated research and a lot of trial and error. The good news is that you don't have to do it alone.

If you want your website or blog to be flawless and easy to crawl, do not hesitate to contact Coco Solution. We will make sure that you rise to the top of the search engine results pages!
